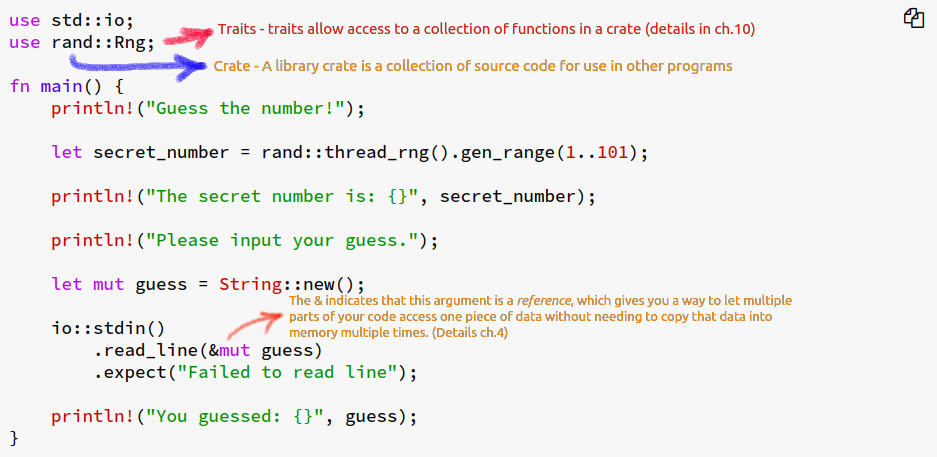

Despite the rapid advancement of tools and specifications, web development has involved pretty much the same set of actions for a developer all along.

It all starts with an index.html file. An entire decade has been spent on creating different ways to generate this file. From writing each line of HTML by hand to creating it on the fly using a virtual DOM, we have come a long way.

Then comes the data that sits in these HTML files, followed by the interaction. The data flows from a data source, usually a database. The developer’s first task is to figure out a way to get this data from the database onto the HTML page. This is where most of the effort goes, so much so that the single title “Web Developer” has split into “Frontend Developer” and “Backend Developer”.

But that’s all an old story, a thing of the past, a bygone era, remnants of an old civilization… (looks dreamily into the void). Alright, I might be exaggerating a tiny bit, but I am in no way wrong.

The Emergence of Low-Code and No-Code Tools

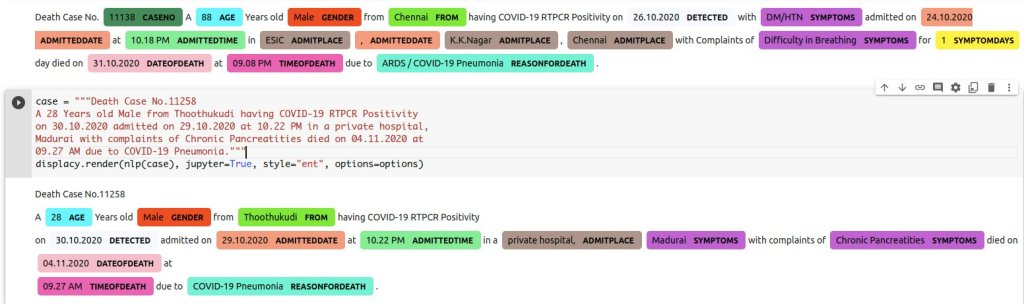

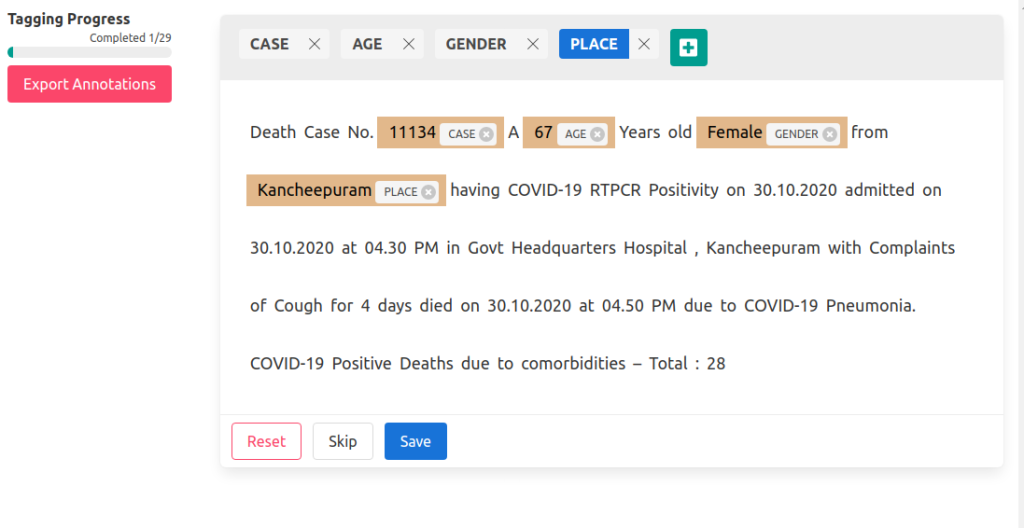

As with everything these days, I stumbled into the world of low-code and no-code tools due to COVID-19. When India had only a handful of cases and people were trying to build a website to track the pandemic, I built a web app using Python and Django, which quickly became irrelevant and costly to run.

Instead, a live dashboard was built using Google Sheets as the database and API. A simple HTML page with some React code became the frontend. It was fascinating to see the innovative way in which the entire thing was working. At one point I saw 89 people actively editing the sheet, updating data, and 100,000 others consuming it on the dashboard. It changed the perception of web development completely.
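To make the "sheet as database and API" idea concrete, here is a minimal sketch of how a frontend might read rows from a sheet. It is not the code that dashboard used. It assumes the sheet is readable through the Google Sheets API v4 `values` endpoint with an API key, and the column names `state` and `confirmed`, the `CaseRow` type, and both function names are made up for illustration.

```typescript
// Shape of a Sheets API v4 "values" response (only the field used here).
interface ValueRange {
  values?: string[][];
}

// Hypothetical row type: the column names are assumptions for illustration.
interface CaseRow {
  state: string;
  confirmed: number;
}

// Turn the 2D array of cells (header row first) into typed records.
function rowsToRecords(values: string[][]): CaseRow[] {
  if (values.length === 0) return [];
  const [header, ...rows] = values;
  const stateIdx = header.indexOf("state");
  const confirmedIdx = header.indexOf("confirmed");
  if (stateIdx < 0 || confirmedIdx < 0) {
    throw new Error("expected 'state' and 'confirmed' columns");
  }
  return rows
    // Skip rows an editor has left blank.
    .filter((row) => (row[stateIdx] ?? "").trim() !== "")
    .map((row) => ({
      state: row[stateIdx].trim(),
      // Missing or non-numeric cells fall back to 0.
      confirmed: Number(row[confirmedIdx] ?? 0) || 0,
    }));
}

// Fetch one range of the sheet. All three arguments are placeholders
// that the caller supplies; none of them come from the original project.
async function fetchCases(
  spreadsheetId: string,
  range: string,
  apiKey: string
): Promise<CaseRow[]> {
  const url =
    `https://sheets.googleapis.com/v4/spreadsheets/${encodeURIComponent(spreadsheetId)}` +
    `/values/${encodeURIComponent(range)}?key=${encodeURIComponent(apiKey)}`;
  const response = await fetch(url);
  if (!response.ok) {
    throw new Error(`Sheets request failed: ${response.status}`);
  }
  const body = (await response.json()) as ValueRange;
  return rowsToRecords(body.values ?? []);
}
```

A React component could call `fetchCases` on a timer and render the result, which is roughly all the "backend" such a dashboard needs. The people editing the sheet become the write path.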

Future of Web Development

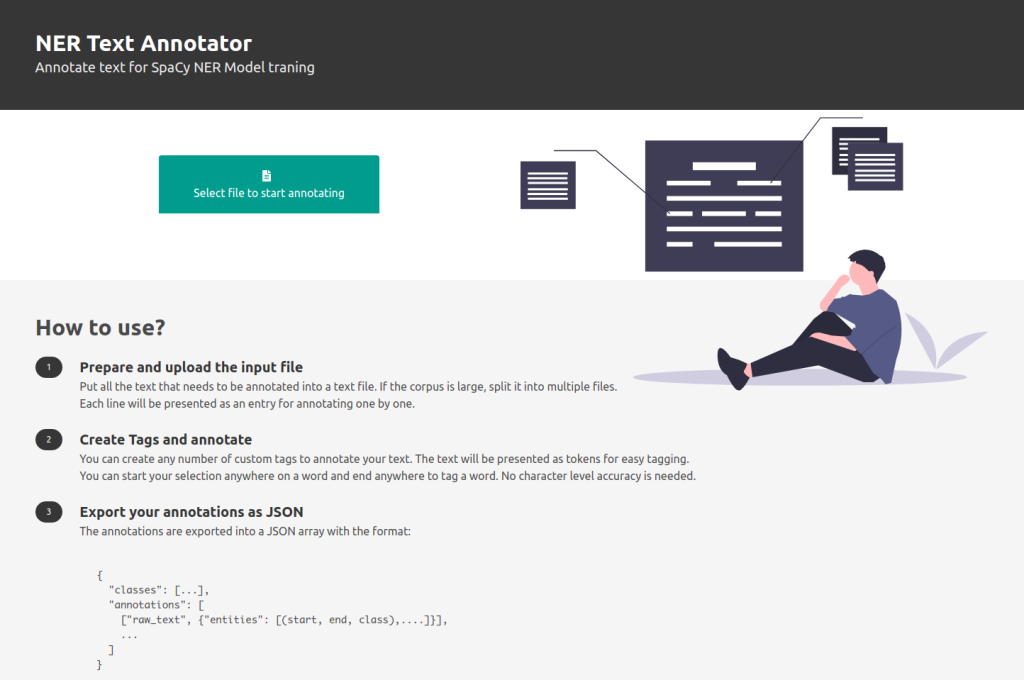

Ever since, I have been intrigued by this notion of building things on the web without the traditional database-backend-frontend setup. All of those pieces might still exist, but they are abstracted away so that you are not actively setting up VMs, running a database server, or compiling code – that sort of nuts and bolts.

https://nocodelist.co/ is a website that lists tools that allow one to do that. It has tools for almost any task conceivable.

- You want to draw the UI, adjust colors, and generate Angular code that you can just add business logic to – UI Bakery has you covered

- You want to convert your Google Sheets into a database with a REST API – there are at least 8 apps for that

- How about a drag and drop UI builder that you can directly hook into your database without a backend server? – AppSmith will let you do that

In each of the instances above, a lot of details are abstracted away into the tool. Only the part that actually requires human decision-making is left for the developers.

Just as we moved from writing HTML by hand to building virtual DOMs using JavaScript, we might be moving from writing the JS ourselves to defining just the logic.

Conclusion

For a modern frontend developer, HTML is an afterthought. Frameworks like React, Vue, and Angular have given us a way to think about the web in terms of components instead of HTML tags. We might soon be thinking about web apps in terms of user flows while the frameworks deal with the components.
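For anyone who has not worked this way, thinking in components roughly means writing small functions that take data and return markup, then composing them. The sketch below uses plain TypeScript functions as stand-ins; it is not the API of React, Vue, or Angular, and `StatCard` and `Dashboard` are hypothetical names.

```typescript
// A "component" here is just a function from props to markup.
type Component<P> = (props: P) => string;

// Escape text so data values cannot inject markup.
function escapeHtml(text: string): string {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

interface Stat {
  label: string;
  value: number;
}

// Hypothetical leaf component: one labelled number.
const StatCard: Component<Stat> = ({ label, value }) =>
  `<div class="stat"><h2>${escapeHtml(label)}</h2><p>${value}</p></div>`;

// Hypothetical container component: composes StatCard, never touches tags directly.
const Dashboard: Component<{ stats: Stat[] }> = ({ stats }) =>
  `<main>${stats.map((s) => StatCard(s)).join("")}</main>`;
```

The author of `Dashboard` only decides which cards appear. The tags live one level down, which is the same kind of step a flow-based tool takes when it hides the components in turn.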

You could even say that we already do, and that I am just late to realize it.